Apple moving to ARM CPUs for MacBooks: implications for Windows, Linux, and desktop computing

Apple has announced that it will be moving its line of MacBook laptops from Intel-based CPUs to ARM-based CPUs. While this does not have any immediate impact on the Windows or Linux desktop computing markets, Apple’s move validates the platform and may give Microsoft an incentive to again experiment with Windows on ARM (RIP Windows RT 2012-2015).

This has implications for Linux desktop computing, which relies upon the availability of Windows-on-Intel “Wintel” hardware. The transition to ARM CPUs on Mac and Windows hardware may be accompanied by bootloader lockdown and the inability to install unsigned binaries or other operating systems.

Since a Mac person may have found this blog post by searching for “Mac” and “ARM” I should address their concerns first

For the 80% of consumers using 20% of software features, the Mac experience will not change.

No doubt, Adobe’s Creative Suite applications like Photoshop, and Document Cloud applications like Acrobat, will be ported and available on the first day the MacBook on ARM goes on sale. I would expect that the open source movement will embrace the platform and have applications like VLC and Tunnelblick ready as well. Some specialized applications will not be available right away.

There will be disappointing performance using Rosetta 2 or other Intel-to-ARM CPU emulators.

Advanced Mac consumers will likely keep at least 1 Intel-based Mac for power and compatibility for the first year or 2 of the transition, to run the Mac applications that are not yet available for the native ARM.

Advanced Mac consumers will find that Parallels is less effective in virtualizing Windows, as it will be emulating the Intel CPU at the same time as it hosts the guest operating system. Boot Camp, which allowed multiple-boot to Windows in the past, will not be available, even when Windows on ARM is again available.

Advanced Mac consumers will likely keep at least 1 Intel-based Windows computer for compatibility as they lose the ability to run Windows in emulation or multiple-boot.

Software piracy is likely to be more difficult, due to a lack of Mac-on-ARM software available to pirate, and may be blocked altogether by App store controls and binary signing.

Market conditions are different from 2015

Market conditions and customer expectations are different from 2015. The inability to install 3rd-party software directly without using an app store intermediary was a deal-breaker in 2015. Now, 13 years after the introduction of the iPhone, customers are comfortable using app stores to access software. It may not be a coincidence that Windows 10 now has a relatively usable App store with free-as-in-beer and sometimes even free-as-in-Libre apps like VLC.

The App store can be used to stop piracy

This will have profound effects on the Mac desktop ecosystem, and may validate a similar path for Microsoft. If Apple is able to transition half of its unit shipments to ARM (I predict that Mac will retain Intel models at the high end for performance and binary compatibility for a few years), then Microsoft will follow.

Open source communities like Linux and BSD rely upon hardware from the Wintel hardware ecosystem.

Desktop Linux needs Wintel hardware. There are hobbyist solutions based on ARM, like the Raspberry Pi and the Pinebook, and these platforms get better with every release. The software is there: both Red Hat (Fedora) and Canonical (Ubuntu) now support AArch64 ARM CPUs. However, the performance is not there, and the hardware tuning is not there. We need to encourage Pi and Pine, because they may be all we have in 10 years.

Linux was almost blocked from Wintel hardware in 2011 by UEFI Secure Boot

Around 2011, there was concern about viruses that would infect the master boot record (MBR). A solution called Secure Boot, a feature of the Unified Extensible Firmware Interface (UEFI), essentially locked the computer so that it would only boot an operating system loader signed with a certificate issued to a software publisher for a fee. Whether intentional or not, this had the potential to prevent open operating systems like Linux from installing on this new generation of Wintel hardware. A technical and political solution was found, and the Secure Boot threat to open source software was neutralized.

Android hardware is a vision of this possible future

Android telephones are essentially Linux-on-ARM computers. Most of them have bootloaders that are “locked” and will only allow software with a specific digital signature to be installed on the phone’s hardware. There are some phones that are easier to “unlock” than others.

When Windows RT came out in 2012, it did not allow “unsigned binaries.” The only software you could install was via the RT app store. When the Mac introduced an app store, the setting that blocks unsigned binaries was initially off by default. Later versions turned it on by default, requiring consumers to find the option and disable it before “unsigned” software was permitted on the computer.

Consumers have been trained by the app stores on iOS and Android

Now that consumers have been trained to accept app stores as the intermediary between their computer and the software they wish to install, it is not hard to imagine a future where the app store is all that is left. To protect consumers from security threats, Apple (and later Windows) may use app-store-on-ARM to eliminate piracy, while carefully cultivating the sense of openness by allowing open source apps into the app stores, as is the case with iOS and Android.

What happens if the Windows-on-ARM hardware has a locked bootloader?

Losing the ability to install desktop Linux on Mac hardware is not a big deal, in numerical terms. From a Linux hacker’s point of view, the issue will be Wintel and its eventual replacement, Windows-on-ARM (“WinARM”).

The server hardware market will accommodate Linux-on-ARM. But what about the desktop and tablet hardware markets?

Let’s imagine the following hypothetical timeline:

2020. MacBook-on-ARM released to market, does not suck. Software available via app store only, no sideload of unsigned binaries, locked bootloader, like an iPhone or most Android phones.

2021. Windows-on-ARM released to market, does not suck. Software available via app store only, no sideload of unsigned binaries, locked bootloader, like an iPhone or most Android phones.

Linux hackers will be able to use Windows-on-Intel junk for 5 years

For the first 5 years, this will not be a problem in practical terms. Linux hackers will be able to find and reformat used Windows-on-Intel hardware. But after that?

Back to the Raspberry Pi and Pinebook

This brings us back to the Raspberry Pi, and the Pinebook. We have to hope that these projects succeed. They may be the only hardware platform we will have left, on which to install free-as-in-Libre software.

Desktop Linux needs Microsoft Office and Adobe Photoshop

I know of a professional firm that uses a Linux server to store documents for a network of Mac users. The server has a screen, keyboard, and mouse at a desk in the photocopier room.

Linux is a weird kind of Mac

The office manager was asked to sit at the console, enter a password, and check connectivity using the web browser. When the office manager saw a desktop with Chrome and TeamViewer, she seemed to relax: “oh, it’s a weird kind of Mac.”

Desktop computer market share 2013 vs 2020

Let’s take a look at the desktop computer market 2013 vs 2020:

2013

Windows: 90%

MacOS: 8%

Linux: 1%

2020

Windows: 78%

MacOS: 17%

Linux: 2%

(source: https://www.statista.com/statistics/218089/global-market-share-of-windows-7/)

12% of the desktop computer market has moved away from Windows

Desktop computing has not fundamentally changed for the past 7 years. But due to problems with system stability and security challenges, Windows has lost 12 percentage points of the desktop computer market. MacOS gained 9 of those points, and Linux gained 1.
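
The shifts between the two years can be checked with a few lines of arithmetic. The sketch below uses only the share figures quoted above; the function and variable names are illustrative. The differences are percentage points of the whole market, and the MacOS and Linux gains do not add up to the full Windows loss.

```python
# Desktop market shares quoted above, in percent of the whole market.
shares_2013 = {"Windows": 90, "MacOS": 8, "Linux": 1}
shares_2020 = {"Windows": 78, "MacOS": 17, "Linux": 2}


def share_changes(before, after):
    """Return the change in share, in percentage points, for each platform."""
    return {name: after[name] - before[name] for name in before}


changes = share_changes(shares_2013, shares_2020)

# Points lost by Windows that are not explained by MacOS or Linux gains
# (the quoted figures do not say where these went).
unaccounted = -changes["Windows"] - changes["MacOS"] - changes["Linux"]

for name, delta in changes.items():
    print(f"{name}: {delta:+d} points")
print(f"Unaccounted: {unaccounted} points")
```

The remainder is whatever the three listed platforms do not cover in the quoted figures.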

Windows on Intel, UNIX on Intel

At the time of this writing, Windows, MacOS, and Linux desktop computers mostly use Intel-compatible CPUs. MacOS and Linux are both essentially UNIX-on-Intel computers, with roughly the same performance and security advantages relative to Windows running on similar hardware. As our friend the office manager put it, “Linux is a weird kind of Mac.”

Applications are brand names

Our friend the office manager recognized a few brand names: Chrome and TeamViewer. Their presence validated the platform. They allowed her to consider the Linux desktop as a viable alternative to her Mac.

Some standard applications are already present on the Linux desktop

On the Linux desktop on which I am writing this post, I have Chrome, Filezilla, VNC, VLC, TeamViewer, Zoom, and Teams. These same applications can be installed on Windows and MacOS computers.

The Linux desktop does not have Microsoft Office or Adobe Photoshop

At the time of this writing, Microsoft Office and Adobe Photoshop are not available for the Linux desktop.

Work-alike replacements are not valid in the eyes of consumers

This post is being written in LibreOffice Writer, which is open source software that tries to reproduce the Microsoft Office suite. Like Pages for the Mac, it does a competent job of reading and writing word processing files. But it is not Microsoft Office 365. Same for Photoshop: there are many image editing programs, but they are not the brand name, and really not the same thing. Word processing might be one thing, but aside from the simplest spreadsheets and presentations, Excel and PowerPoint are not really replaceable.

Macs have Office and Photoshop

Macs are relevant to consumers because they can run Microsoft Office 365 and Adobe Photoshop. They may not be able to run every accounting or engineering program available for Windows, but having Office and Photoshop covers most needs. That is why when Windows lost 12% of the entire market, MacOS was able to capitalize on the opportunity to capture the consumers making a change, but Linux was not.

If Linux had Microsoft Office 365 and Adobe Photoshop

If Linux had Microsoft Office 365 and Adobe Photoshop, we would see the emergence of a second strong UNIX on Intel desktop computer platform. Linux, the “weird kind of Mac,” would get more interest, more relevance, and more market share.

BigBlueButton and Greenlight: videoconferencing server with user and session management, Linux Meetup presentation

WireGuard: Linux Meetup presentation

Las Vegas Helicopter ride

Taffee, a cat, scratching at a window

The shell

In the early 1990s, during the glory days of UNIX culture, being able to score a telnet window, a shell account on a UNIX server, was a big deal.

Back then, a combination of borrowed credentials, academic accounts, and commercial providers hosted the UNIX shell accounts that provided finger and talk and FTP and pine mail and usenet readers and IRC.

UNIX culture, what remains of it, has been subsumed into the Linux server culture, which itself is being eaten by cloud and devops. But one thing remains, for those who want it: the shell. I remember deploying a Linux server 20 years ago: it was non-trivial and required the re-purposing of Wintel metal. That choice remains (a tiny netbook running Linux is like having a tiny mainframe with its own UPS and console), but other choices, like $5 per month cloud servers, VMware Player guest instances, and Raspberry Pi servers, make the shell available to anyone who wants it.

We do not realize just how lucky we are.

De-clouding: hosting virtual servers on-premises to reduce hosting telecom burn

Enterprises that have a significant monthly cloud bill should business-case an approach that uses the cloud for public-facing assets but considers on-premises virtual hosting with no incremental telecom increase. A local deployment of a set of virtual servers can be done in a Linux or Windows context. Depending on hardware, platform, and workload, an on-premises server should be able to host between 1 and 7 virtual server guests.

Most server deployments are now virtual. Aside from edge cases that rely on raw horsepower or low latency, like file servers and voice over IP servers, bare metal rarely wins the business case.

The flexibility and benefits of virtualization have led to practices and tools that require multiple versions of a server image, for devops or redundancy, and powerful automation tools that can script the creation, orchestration, and destruction of virtual servers as needed.

There are also network-effect reasons why it is not such a bad thing for a small business to be left with an AWS account by a web developer.

However, even enterprises in the 20 employee range will accumulate a number of server processes, most hosted on public cloud services, which will each incur recurring monthly fees. Some of those enterprises would save money by bringing the processes on-premises and in-house.
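
One way to start the business case is a simple break-even estimate. The sketch below is a minimal illustration, and every figure in it is a hypothetical placeholder rather than data from a real deployment: the hardware price, the monthly cloud bill, and the monthly on-premises running cost all need to be replaced with actual quotes.

```python
import math


def months_to_break_even(hardware_cost, cloud_monthly, onprem_monthly):
    """Months until an on-premises server pays for itself.

    hardware_cost:  one-time purchase price of the on-premises server
    cloud_monthly:  recurring cloud fees that the server would replace
    onprem_monthly: recurring on-premises costs (power, offsite backup, upkeep)
    Returns None when on-premises hosting never becomes cheaper.
    """
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        return None
    return math.ceil(hardware_cost / monthly_saving)


# Hypothetical figures, for illustration only.
print(months_to_break_even(hardware_cost=3000, cloud_monthly=400, onprem_monthly=150))
```

A result of None is as useful as a number: it says the workload should probably stay in the cloud.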

There are business cases that make sense for the cloud. The web site should live in the cloud. As a related example, though, the web site’s backup can be hosted on a local server connected to the on-premises DSL line.

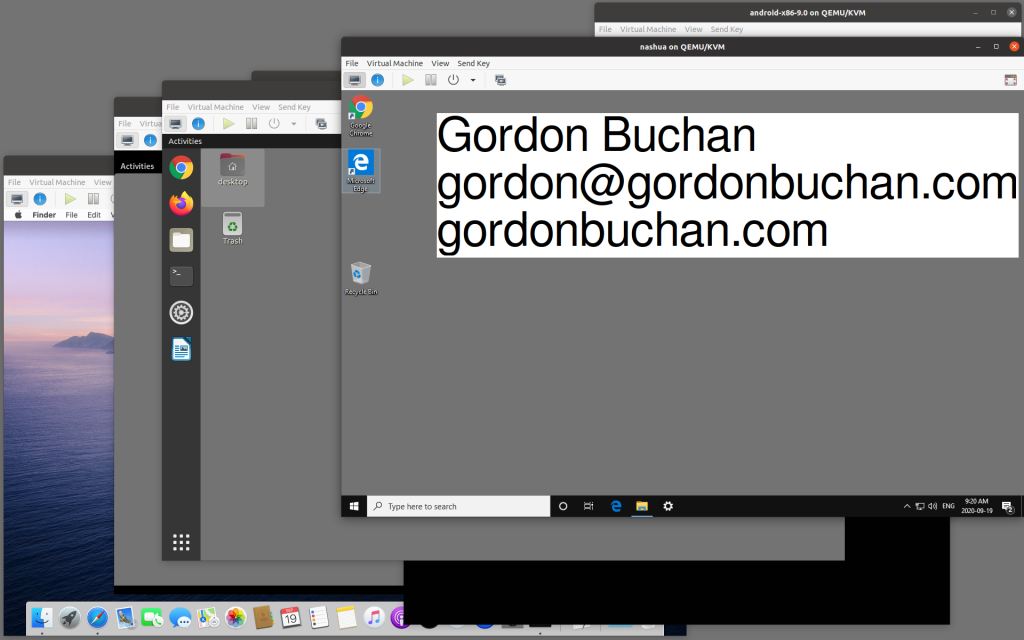

For Linux workloads, virt-manager with KVM and QEMU is a good combination; Boxes leverages this toolset as well.
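
Before installing this toolset, it is worth confirming that the host CPU exposes hardware virtualization, which KVM depends on. The sketch below is a minimal check, assuming a Linux host with an Intel-compatible CPU, where /proc/cpuinfo lists the vmx (Intel VT-x) or svm (AMD-V) flag and a loaded kvm module creates /dev/kvm. The function names are illustrative.

```python
import os


def cpu_virtualization_flag(cpuinfo_text):
    """Return 'vmx' (Intel VT-x), 'svm' (AMD-V), or None from /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            for flag in ("vmx", "svm"):
                if flag in flags:
                    return flag
    return None


def kvm_device_present(path="/dev/kvm"):
    """True when the KVM device node exists, meaning the kvm module is loaded."""
    return os.path.exists(path)


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            flag = cpu_virtualization_flag(f.read())
    except OSError:
        flag = None  # not a Linux host, or /proc is unavailable
    print("CPU virtualization flag:", flag or "none found")
    print("/dev/kvm present:", kvm_device_present())
```

If the flag is missing, virtualization may simply be disabled in the firmware settings of the machine.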

A hybrid approach typically keeps the web marketing server as well as email and calendar services on public clouds, but performs backoffice, ERP, database, and backup operations in virtual servers hosted on on-premises equipment, at a lower cost than the equivalent service from an asset hosted externally by a vendor. Of course, this comes with the responsibility for an offsite backup and disaster recovery plan. Start with 2 hard drives, and take one offsite each week. Then get fancier, maybe with another on-premises server at another campus.

Systems can even be hybrid, with a public-facing website on a cloud service mounting cheap assets stored on an on-premises server.

For Windows, some shops use VMware quite effectively, especially with its server and management tools. However, I would suggest a strong look at Windows Hyper-V, which does just as well hosting Linux guests as it does Windows guests, and fits nicely into a corporate environment.

In the same big-company vein, the Azure AD cloud deserves a look. Microsoft has shown a vision of the future in which the cloud acts to orchestrate a mix of cloud and on-premises assets with a common Active Directory.

By considering where the public cloud adds value to a server deployment, and finding savings by bringing some virtual server workloads back on-premises and in-house, enterprises can achieve significant savings that can be re-purposed to other priorities.

(Almost) off the grid

Sitting on the deck in front of a lake in the Laurentians north of Montreal, I find myself almost off the grid. There is no cell phone coverage for about 20 km before the driveway, so no 3G wifi hotspot. A rural data wireless provider with antennas on mountaintops usually provides a decent wifi connection, but a power surge destroyed the base station of a radio, and here I find myself reduced to my last 2 lines of communication: satellite TV and an old-school voice landline.

Yes, I did make a dialup connection over the landline last week: it was 24 kbps, slow even by dialup standards, and modern web pages, even those optimized for lower-speed connections like the HTML version of Gmail, are completely unusable.

Colleagues are covering for technical support responsibilities in civilization, and my brother will drive me this afternoon to the community center, 7 km away. Until then, I find myself essentially cut off: no WhatsApp texts, no checking for latest headlines, weather, or trivia, no streaming audio for my airpods.

So here I am typing on a computer in offline mode, to be pasted to the Internet later today. This reminds me of a project I have put off several times: a complete offline web development environment. Hosting a LAMP server is trivially easy, whether on the bare metal of a Linux laptop, or as a VM guest on a Windows laptop, but one must take precautions to be productive offline: I need to install a local copy of the php.net documentation, and I have found some interface code that must be redone to invoke local copies of JavaScript libraries, rather than pulling them in from remote locations at run time.
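
Finding the pages that still depend on remote libraries can be partly automated. The sketch below is one possible approach, not a complete tool: it uses Python's standard html.parser module to list script and stylesheet references that point at another host. The class and function names are illustrative, the sample page and its CDN address are made up, and the check only inspects static HTML, so references generated by PHP at run time would have to be checked against the rendered output.

```python
from html.parser import HTMLParser


class RemoteAssetFinder(HTMLParser):
    """Collect <script src> and <link href> values that point at a remote host."""

    def __init__(self):
        super().__init__()
        self.remote_assets = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script":
            url = attributes.get("src")
        elif tag == "link":
            url = attributes.get("href")
        else:
            return
        # Absolute and protocol-relative URLs name a host explicitly;
        # relative paths are served by the local web server.
        if url and url.startswith(("http://", "https://", "//")):
            self.remote_assets.append(url)


def find_remote_assets(html_text):
    """Return the remote script and stylesheet URLs found in html_text."""
    finder = RemoteAssetFinder()
    finder.feed(html_text)
    return finder.remote_assets


# Made-up sample page: one remote library, two local files.
page = """
<html><head>
<link rel="stylesheet" href="css/site.css">
<script src="https://cdn.example.com/jquery.min.js"></script>
<script src="js/app.js"></script>
</head><body></body></html>
"""
print(find_remote_assets(page))
```

Each URL it reports is a library to download once and serve from the local LAMP server instead.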

People tell me that I will benefit from being “unplugged,” that it will relax me. They are mistaken, although I will survive until Monday morning when I return to the city, sustained this afternoon by a half hour of the community center’s free wifi. The rural data wireless base station will be replaced at some point, I hope soon – I will be back in the city on Monday morning, but my Mom spends the summer up here – I hope for her that she will soon get wifi for her iPad.

By the way, here at the community center: wifi is awesome, never take it for granted.